29% Pay For Their Own AI Tools at Work

One statistic from our AI survey reveals hidden motivations for self-funding AI tools

Exploding Topics just reported on AI trends at home and at work.

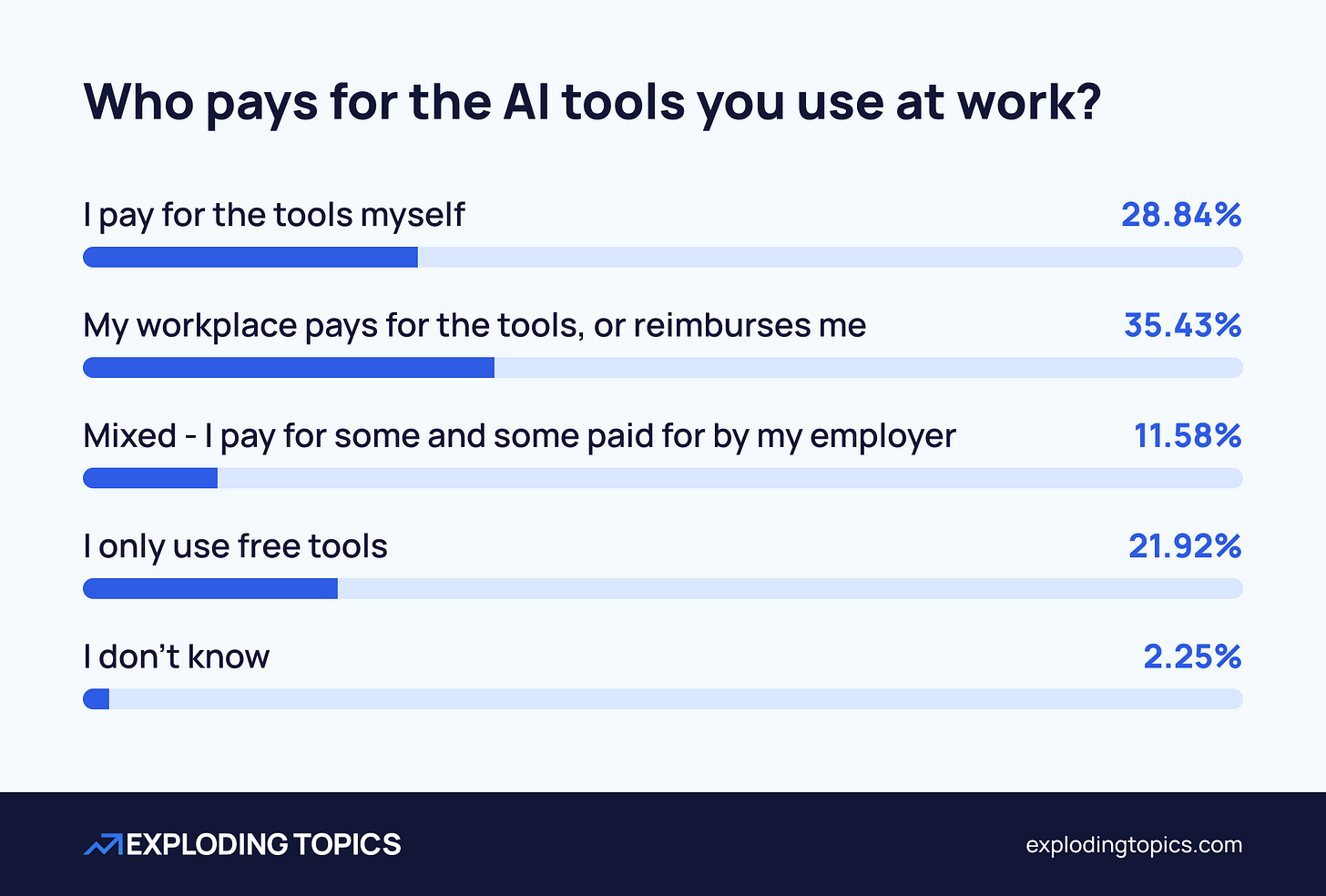

There were some eye-opening findings, like this one: at work, 29% of respondents said they pay for their own AI tools.

At first glance, this seems crazy. Why would anyone self-fund software for work?

Look more closely, though, and it turns out to be a layered issue with plenty of legitimate motivations.

I picked out this headline statistic because it sparked a lively discussion in r/ChatGPT on Reddit, and I want to look at some of the comments here.

Why are people paying for their own AI tools at work?

It’s easy to assume that companies simply don’t want to pay for these tools when they can get employees to feel they have to buy them themselves.

One poster on Reddit commented:

This is wild because my company doesn't just pay for it they mandate we use it and give us access to the max models. Seems idiotic not to pay.

From the employee’s perspective, the cost might be small enough to ignore. And claiming it back as a work expense is often a hassle anyway.

But $20 a month here and there, added up across 500 employees? It’s easy to see why businesses aren’t exactly chasing people down to pay them back.
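To put rough numbers on it, assuming a hypothetical company of 500 staff where every employee pays the $20 monthly rate mentioned above:

$$
\$20 \text{ per month} \times 500 \text{ employees} = \$10{,}000 \text{ per month} = \$120{,}000 \text{ per year}
$$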

That said, this explanation is not the whole story, as the discussion revealed.

1. Secretly paying for AI to lighten the load

A significant number of people on Reddit said they’re willing to pay for their own AI tools to reduce their workload or work-related stress.

I finish my work quicker and live a less stress life for like what 20 bucks lol

The sentiment here is: yes, this is an out-of-pocket cost, but it pays for itself in terms of better work-life balance.

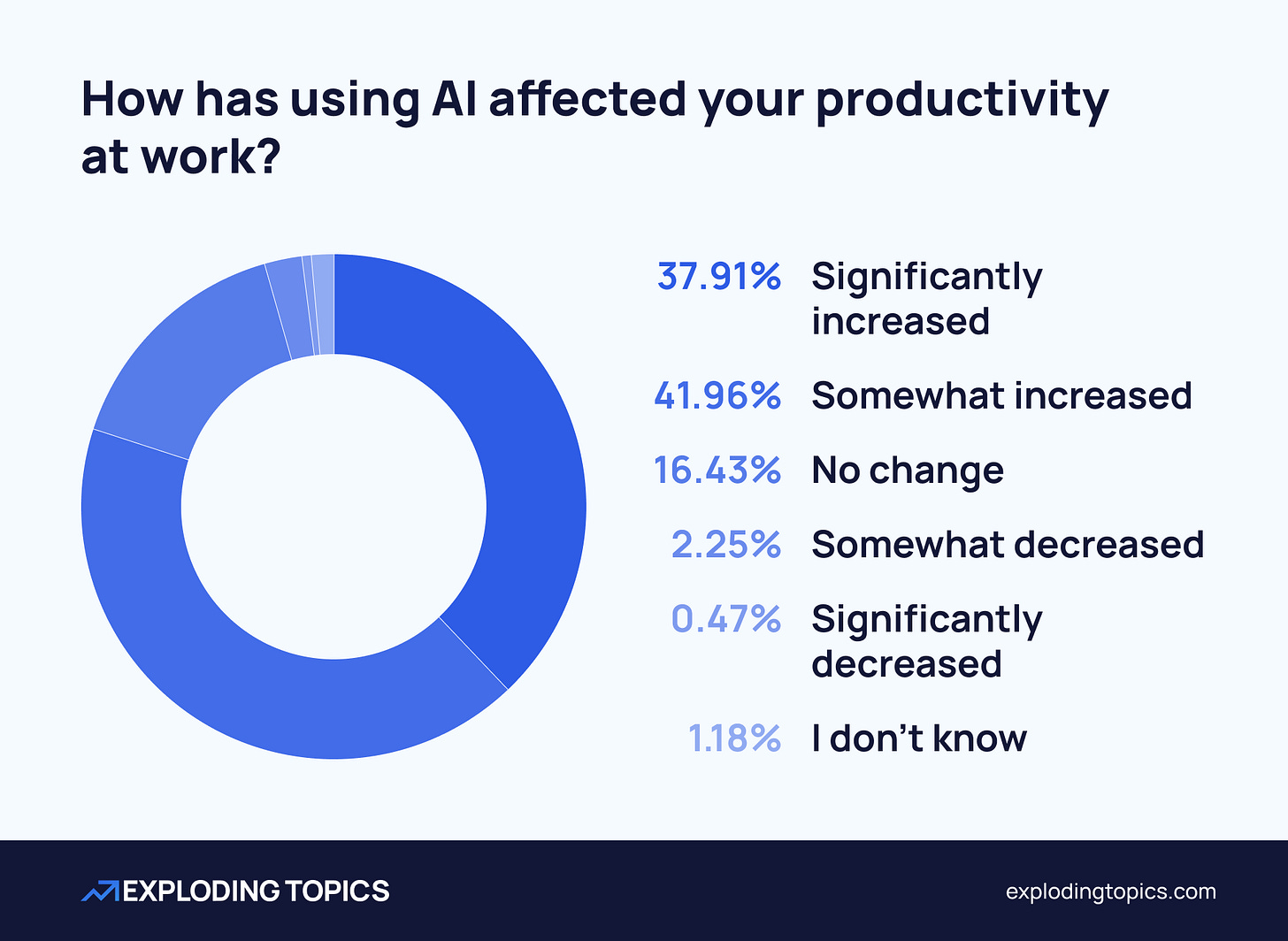

A significant majority of people feel using AI at work makes them more productive.

If you’re keeping the productivity benefits yourself, self-funding AI seems like a bargain.

(Of course, this is dependent on your employer never finding out.)

Relatedly, some people pay for AI tools to get ahead on their work and gain a competitive or financial edge.

This might involve working two remote jobs and getting paid for both at the same time.

But a number of commenters on Reddit pointed out (correctly) that self-reported productivity gains can be an unreliable metric.

Edit: the formidable Gary Marcus wrote about that here.

And it also depends on how you measure productivity.

A recent piece in the New York Times really resonated with me, particularly this:

During a corporate retreat in March, [Greg Schwartz, the chief executive of StockX] decided to push 10 senior leaders to play around with these tools, too. He gave everyone in the room, including the heads of supply chain, marketing and customer service, 30 minutes to build a website with the tool Replit and make a marketing video with the app Creatify.

“I’m just a tinkerer by trait,” Mr. Schwartz, 44, said. “I thought that was going to be more engaging and more impactful than me standing in front of the room.”

Here’s the question, though:

How many senior leaders went on to incorporate Replit or Creatify into their work?

Are employees succeeding with AI, or constantly taking two steps forward and one step back?

Are businesses solving problems with AI, or just mandating usage without clear goals?

This is a fascinating area. So many businesses are all-in on AI but haven’t seen measurable impact yet.

In fact, a study conducted by MIT found that generative AI implementation could be falling short at as many as 95% of companies, and that lack of training is a factor:

…for 95% of companies in the dataset, generative AI implementation is falling short. The core issue? Not the quality of the AI models, but the “learning gap” for both tools and organizations.

2. Protecting their own privacy or job security

This is an interesting one.

I’ve seen shared AI tools leak usage details to other team members. And I know that employees tend to distrust work resources because they assume their usage will be monitored. If bring-your-own-device (BYOD) policies are anything to go by, that assumption is probably correct.

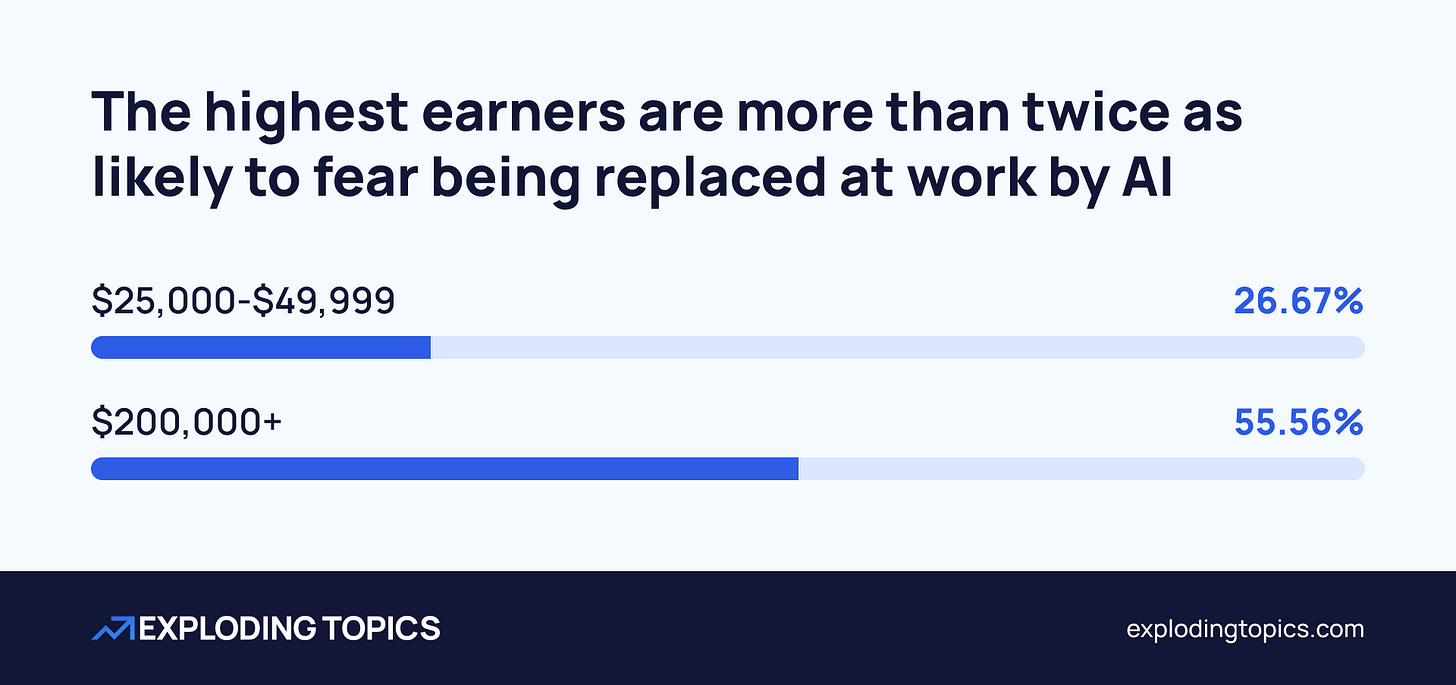

Relatedly, some people said they worried they’d be replaced if they disclosed their use of AI at work. That ties right back to our findings.

So there’s strong evidence that people want to use AI, but fear they could essentially replace themselves if they disclose it.

3. Self-funding because companies won’t

Many people said that they don’t want to pay for their own AI tools. They’d much rather have the company invest in proper training and company-funded AI accounts.

There are two main sentiments here:

First, the lack of training and support is a missed opportunity for businesses that could be doing a lot more to adopt AI in the right way.

As this poster said, this isn’t new:

Most companies don’t actually invest in teaching employees how to properly use the software they already pay for. People learn just enough to get by, and that’s it.

Maybe some employers feel that leaving people to figure it out is a legitimate route to mass adoption.

Second, I picked up on a sense that there’s a risk of exploitation. If you’re open about using AI, you may be asking for trouble:

I mean… if the company pays for it then they’re just gonna give you more work, if you don’t tell nobody you get some free time on the clock

If the business funds everything, it could become a slippery slope: workload increases and the employee pays the price by essentially designing their own AI replacement.

Finally, this parallel to teachers was incredibly insightful:

For thousands and thousands of teachers in the US, it's the start of a new school year and there are no supplies, no funds. Just instructions that their classroom is expected to be decorated and equipped with maps, posterboard, whatever teaching items the school requires. There are meetings all week. The school will be kept open afterhours and weekends so teachers can still get their assigned duties finished. By today, for some.

White collar and information workers are about to find out what the OG information workers have dealt with their entire careers.

What about the AI vegans?

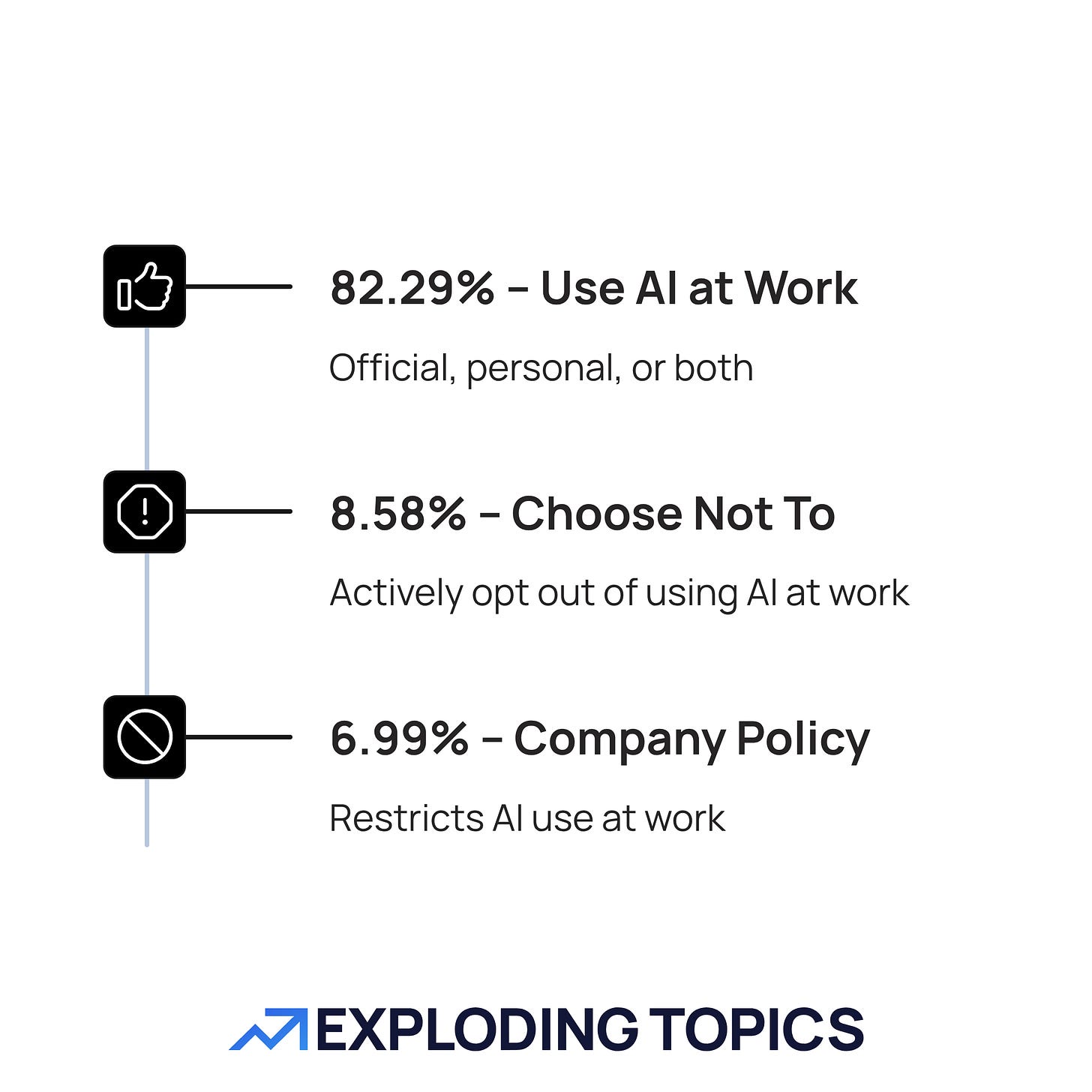

“AI veganism” is a new term describing people who consciously choose not to use AI at all.

People who refuse to use AI are represented in our survey: 8.58% said they choose not to use it. Unsurprisingly, there weren’t many comments about this in r/ChatGPT, which is why I haven’t covered it above.

All in all, there are multiple motivations for paying for AI out of pocket.

We’re used to employers paying for essential software, like Slack and Google Workspace. With AI, the situation is different.

Some people think that you’ll have to pay to keep up with expectations or gain an advantage.

But I’ll close with an important point from one commenter: the more employees use unauthorized tools, the less secure your data is.

…this is a phenomenon called Shadow IT and it's actually usually harmful for companies, because it means their sensitive data is being shared and used to train other models. Only really matters for major corporations, though, and is probably a little paranoid anyway. But you don't enter an enterprise contract to provide something for your employees, you do it to have more control over your proprietary data.

In the end, that might be the motivator that changes the way AI tools are paid for at work.

Thanks to everyone who contributed to the discussion about our study. You can check it out here, and we’d love to know your thoughts in the comments.